DATA SECURITY AND INTEGRITY IN CLOUD COMPUTING

Abstract

Cloud computing is used by businesses, organizations, and individuals for both official and informal work. Cloud services make data and applications easy to manage, and they deliver higher efficiency with less effort.

The cloud is mainly used as a storage area, because the volume of an organization's data grows as the organization develops. Businesses adopt cloud computing to relieve this storage problem. Protecting the security and integrity of the data is therefore very important in cloud storage applications.

Integrity is essential for keeping data accurate in its storage location. This document presents the issues that affect integrity and the techniques that improve it. Data must also be kept highly secure, so the document also covers the issues caused by security problems and a model for security.

Keywords: Cloud computing, data security, data integrity.

Literature Review

Cloud computing is now everywhere. In many cases, users rely on the cloud without realizing it. According to experts, small and medium-sized organizations will move to cloud computing because it supports quick access to applications and reduces the cost of infrastructure[1].

Cloud computing is not only a technical solution but also a business model in which computing power can be sold and rented. It is centered on delivering services, and organizational data is hosted in the cloud.

Ownership of the data is decreasing while agility and responsiveness are increasing. Organizations now try to avoid focusing on IT infrastructure so that they can concentrate on their business processes and increase profitability. As a result, the importance of cloud computing is growing; it is becoming a huge market and receives a lot of attention from the academic and industrial communities.

Cloud computing has been defined as a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction.

The definition is straightforward. The model is composed of 5 essential characteristics, 3 service models, and 4 deployment models. With this technology, users outsource their data to servers that are controlled by a provider.

In addition, memory, processors, bandwidth, and storage are virtualized and can be accessed by a client over the Internet. Cloud computing is composed of many technologies, such as service-oriented architecture and virtualization, among others.

There are many security issues with cloud computing. Nevertheless, organizations need the cloud because they require abundant resources during periods of high demand and lack enough resources of their own to meet this need[2]. Cloud computing also offers highly efficient data retrieval and availability, and cloud providers take on the responsibility of resource optimization.

1. Introduction

Cloud computing uses the Internet as a bridge between the user and cloud storage. The cloud provides resources to users according to their needs, and the service is cost-effective[3]. It is accessible to many users because payment is based only on the services actually used. Cloud computing offers different models and services, and users can choose whichever services fit their needs. Several technologies are involved in the cloud computing process.

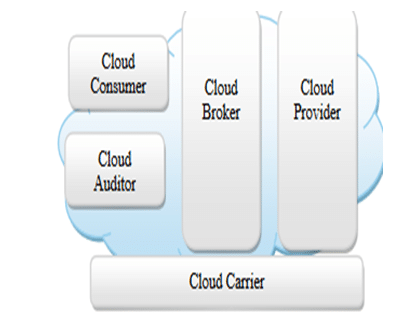

Fig 1: Basic cloud computing structure[2].

This document describes the data security and integrity issues faced in cloud computing, together with techniques that address these issues and provide high performance, reliability, and efficiency for data in the cloud. The cloud delivers services such as PaaS, IaaS, and SaaS.

Data security

Security is an important factor in every aspect of computing. It plays an especially important role in cloud storage, because all information, including sensitive data, is stored in the cloud[4]. Security must be provided to protect this data.

Although cloud computing is easy to use and widely adopted, it has security issues. Security measures protect the data and safeguard the services in storage.

An information security framework for cloud computing environments has been proposed, whose authors mainly discussed the security issues related to cloud data storage.

Many users do not rely on the traditional security architecture in the cloud, so new security techniques are used. When users store data in a remote location, they lose control over it, and it can easily be lost. The biggest issue is handing control of the data to an untrusted cloud service party. Internal vulnerabilities can also affect data reliability and the client's processing power. Data that the user does not access frequently may be deleted by the untrusted party[4].

Data security models

Data in cloud storage has to be protected from attacks, and technologies and controls are designed for this purpose.

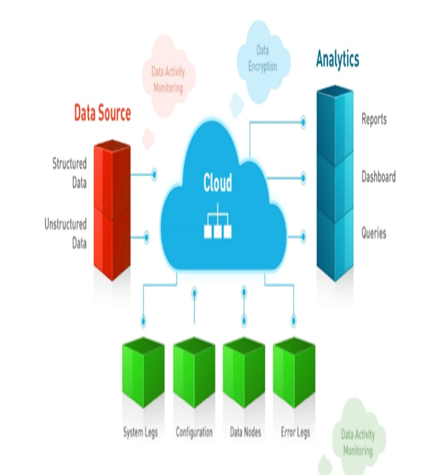

Fig 2: Security system for cloud computing[5].

The diagram above shows the process of developing and maintaining security in cloud computing.

Identifying security domains and subcategories: Applications and other services, together with the physical and logical infrastructure domains, are identified along with their classes of risk. Identifying the physical infrastructure covers the data center facilities and the hardware components that support services and networks[5].

Identifying the logical infrastructure covers networks with routers, OS instances, and data storage that holds unstructured data.

Security in physical infrastructure: Important components such as routers, storage devices, network servers, and other cloud-related equipment have to be physically secured. The data in the cloud has to be monitored, controlled, and managed as part of the security process. Physical access has to be monitored frequently, and information has to be protected from natural disasters and other problems.

Data security: Data can be classified as sensitive, moderately sensitive, or non-sensitive. Data may reside in different locations and may be transferred to external networks. It has to be encrypted before it is stored and before it is transferred to another network or endpoint.

Identity and access management: Its main function is to assign logical access according to the functions a job requires. Identity management is based on the identified business requirements, and access requests are authenticated and authorized as part of this process[6]. Authentication allows cloud resources to be accessed safely.
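
The authentication and authorization steps described above can be sketched as follows. This is a minimal illustration, not a production design: the role names, the `ROLE_PERMISSIONS` table, the in-memory `USERS` store, and all function names are assumptions introduced for the example.

```python
import hashlib
import hmac
import os

# Hypothetical role-to-permission table (illustrative roles and actions only).
ROLE_PERMISSIONS = {
    "auditor": {"read"},
    "engineer": {"read", "write"},
    "admin": {"read", "write", "delete"},
}

# Hypothetical in-memory user store: name -> (salt, password hash, role).
USERS = {}


def _hash_password(password, salt):
    """Derive a salted password hash with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


def register(user, password, role):
    """Store a salted hash of the password together with the user's role."""
    salt = os.urandom(16)
    USERS[user] = (salt, _hash_password(password, salt), role)


def authenticate(user, password):
    """Authentication: is the caller really this user?"""
    record = USERS.get(user)
    if record is None:
        return False
    salt, stored_hash, _role = record
    return hmac.compare_digest(stored_hash, _hash_password(password, salt))


def authorize(user, action):
    """Authorization: does the user's role permit this action?"""
    record = USERS.get(user)
    if record is None:
        return False
    return action in ROLE_PERMISSIONS.get(record[2], set())


def access(user, password, action):
    """Grant access only when both checks succeed."""
    return authenticate(user, password) and authorize(user, action)
```

Access is granted according to the job function (the role), so changing what a job may do means editing one table entry rather than every user record.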

Data integrity

The accuracy of data has to be assured, and its consistency has to be maintained throughout its life cycle. This is the purpose of data integrity. Data integrity at remote locations can be ensured with the proof of retrievability (POR) model. Data integrity is one of the most critical elements in any information system.

Generally, data integrity means protecting data from unauthorized deletion, modification, or fabrication. Managing an entity's admittance and rights to specific enterprise resources ensures that valuable data and services are not abused, misappropriated, or stolen. Data integrity is easily achieved in a standalone system with a single database.

In a standalone system, data integrity is maintained through database constraints and transactions, which are usually handled by a database management system (DBMS). Transactions should follow the ACID properties (atomicity, consistency, isolation, and durability) to ensure data integrity. Most databases support ACID transactions and can preserve data integrity[7].
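
As a small illustration of how constraints and transactions protect integrity in a single database, the sketch below uses Python's built-in `sqlite3` module. The `accounts` table, the `transfer` and `balances` helpers, and the sample values are assumptions made for the example.

```python
import sqlite3


def transfer(conn, src, dst, amount):
    """Move `amount` from src to dst atomically; return False if it is rejected."""
    try:
        # The connection context manager commits on success and rolls back
        # on an exception, so the two updates succeed or fail together.
        with conn:
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?",
                (amount, dst),
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        # The CHECK constraint rejected a negative balance; the credit that
        # was already applied to dst has been rolled back.
        return False


def balances(conn):
    """Return the current balances as a dict."""
    return dict(conn.execute("SELECT name, balance FROM accounts"))


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    "name TEXT PRIMARY KEY, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()
```

A transfer that would overdraw an account fails as a whole, leaving both rows unchanged; this is the atomicity and consistency part of ACID.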

The proof-of-retrievability model combines error correction with spot checking. It focuses on static data and is also used in distributed systems. Small changes in the data are repaired by the error correction method, and the data is shuffled[8]. Cloud computing requires comprehensive security solutions that cover many aspects of a large and loosely integrated system.

The application software and databases in cloud computing are moved to large centralized data centers, where the management of the data and services may not be fully trustworthy.

Threats, vulnerabilities, and risks for cloud computing have been described, and a cloud computing security development lifecycle model has been designed to achieve safety and to let users take as much advantage of the technology as security allows while facing the risks to which data may be exposed.

A data integrity checking algorithm that eliminates third-party auditing has been described; it protects static and dynamic data from unauthorized observation, modification, or interference.

There are several definitions of cloud computing, but all of them agree on how services are provided to the users of the network. Cloud computing is an Internet-based development and use of computer technology.

It implies the use of computing resources, both hardware and software, that are available on demand as a service over the Internet. It provides a range of services for the users of the network, including applications, storage, remote printing, and many other tasks. It typically involves the provision of dynamically scalable and often virtualized resources over the Internet.

Organizations run all kinds of applications in the cloud. Cloud computing can be seen as the technology that keeps data and applications in the cloud, where they are controlled remotely without the need to install particular applications on local computers.

Some of the potential benefits that apply to almost all types of cloud computing include the following:

- Cost savings: Companies can reduce their capital expenditures and use operational expenditures to increase their computing capabilities.

- Scalability: The flexibility of cloud computing allows companies to use extra resources at peak times so that they can satisfy consumer demands.

- Reliability: Services that use multiple redundant sites can support business continuity and disaster recovery.

- Reduced maintenance: Cloud service providers perform the system maintenance, which does not require application installations on PCs.

- Mobile accessibility: Mobile workers are more productive because systems are accessible through an infrastructure available from anywhere.

- Transparency: Additional servers can be added to the provisioned service without interrupting the service or requiring reconfiguration of the application delivery solution. If the application delivery solution is integrated through a management API, transparency is also achieved through the automated provisioning and de-provisioning of resources.

Data security can be seen as including three functions: access control, secure communications, and protection of private data. Data security is also defined as the protection of private data and processing from unauthorized observation, modification, or interference.

Cloud computing has been envisioned as the next-generation architecture of the IT enterprise. It moves application software and databases to large centralized data centers, where the management of the data and services may not be fully trustworthy. This new paradigm brings many new security challenges.

The cloud data storage model is made up of several elements: cloud users, cloud service providers, and third-party auditors. Each element plays a specific role in delivering a functional cloud-based application.

a. Cloud users: The users can be individuals or organizations that store their data in the cloud and access it. A user can work with one of the following clients:

- Mobile, including PDAs and smartphones.

- Thin, where the client's computer has no hard drive and the work is done by the server. Thin clients have become popular because of their low hardware cost and because, with data stored on servers, there is less chance of data being lost or stolen.

- Thick, which uses a regular computer with a web browser to connect to the cloud.

b. Cloud service providers (CSP): The CSP manages the cloud servers (CSs) and provides paid storage space on its infrastructure to users. The servers are geographically located in different places. Servers in cloud computing are based on the principle of virtual servers, because the user does not know which server will provide the required service. This is the main difference between cloud computing and distributed computing.

c. Data centers: A collection of servers forms the data center, where the application is housed. Cloud data centers are known as cloud data storages (CDSs).

Software can be installed on one physical server while allowing multiple instances of virtual servers to be used. The number of virtual servers depends on the size and speed of the physical server and on the applications that will run on the virtual servers. Data in cloud computing can be either static or dynamic:

i. Static data: Data that cannot be modified; any modification to it becomes new data. This data can be read and rewritten, but without change. Example: data centers.

ii. Dynamic data: Data that is continuously modified or changed and that is used in transfers between users in cloud computing.

Cloud Computing Service Models

The cloud service provider (CSP) offers its users several kinds of services and tools:

a. Software as a Service (SaaS): The provider uses its cloud infrastructure and cloud platforms to supply users with software applications. In this service, the user can take advantage of all applications. End-user applications are accessed through a web browser, for example Microsoft SharePoint Online. The need for the user to install or maintain additional software is eliminated.

b. Platform as a Service (PaaS): Users can use the cloud infrastructure as a service, together with operating systems and server applications such as web servers. The user can control the development of web applications and other software, using a range of programming languages and tools that are supported by the service provider.

c. Infrastructure as a Service (IaaS): The registered user can access the physical computing hardware of the service provider, including CPU, memory, data storage, and network connectivity. IaaS supports multiple users, referred to as "multiple tenants", through virtualization software. The user gains greater flexibility in access to basic infrastructure.

d. Security as a Service (SecaaS): The different types of Security as a Service are categorized, and guidance is given to organizations on reasonable implementation practices.

Data integrity faces several issues:

Manipulation: Cloud storage users hold a large number of files and store them in the cloud, and the SaaS service of the cloud is used to manage them. Users do not access all of this data equally; some is used often and some rarely.

The cloud may therefore move the data to a remote, outsourced cloud. Such a location cannot be trusted, and the data is not secure there. The data may be altered by any process, and errors in administrative processes can also damage or lose the data[9].

Untrusted remote server: Besides storage, cloud computing also carries out incentive-based computations, which perform tasks in the processing part of the cloud. Without proper security and transparency in the cloud service, there is no proof of the integrity of these computations[8].

Techniques that improve the effectiveness of data integrity are given below:

PDP: PDP stands for provable data possession. It assures data integrity at remote locations[10]. With PDP the user can verify the data without retrieving the original. A key is provided for obtaining the original data. Even small changes in the data can be detected easily.

PDP based on Message Authentication Code: By using a MAC in PDP, the integrity of files stored in the cloud can be checked easily. This technique is simple and is a secure way of handling data[11].
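
The idea of a MAC-based possession check can be sketched with Python's `hmac` module. This is a simplified spot-check under stated assumptions, not the scheme from the cited papers: the block size, the function names, and the list used to stand in for the remote server are all hypothetical. The owner keeps only the secret key, tags every block before outsourcing, and later audits a random sample of blocks instead of downloading the whole file.

```python
import hashlib
import hmac
import secrets

BLOCK_SIZE = 4  # tiny blocks, for illustration only


def split_blocks(data, size=BLOCK_SIZE):
    """Cut the file into fixed-size blocks."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def tag_block(key, index, block):
    """MAC over the block index and content, so blocks cannot be swapped."""
    message = index.to_bytes(8, "big") + block
    return hmac.new(key, message, hashlib.sha256).digest()


def outsource(key, data):
    """Return what the (hypothetical) server stores: (block, tag) pairs."""
    return [(block, tag_block(key, i, block))
            for i, block in enumerate(split_blocks(data))]


def audit(key, storage, sample_size):
    """Challenge a random sample of blocks and verify their tags."""
    indices = secrets.SystemRandom().sample(range(len(storage)), sample_size)
    for i in indices:
        block, tag = storage[i]
        if not hmac.compare_digest(tag, tag_block(key, i, block)):
            return False
    return True
```

Sampling fewer blocks lowers the audit cost but also the chance of catching a single modified block; auditing every block detects any change.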

HAIL: HAIL stands for high availability and integrity layer. In this method the data is stored in several locations, and the resulting redundancy helps to ensure the integrity of the data.

HAIL uses message authentication codes, pseudorandom functions, and hash functions to ensure data integrity[12]. The method generates a proof that is compact and whose size is independent of the size of the data.
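
The role of redundancy can be illustrated with a much simpler stand-in for HAIL. The sketch below assumes plain replication on several servers plus one MAC kept by the owner, whereas HAIL itself spreads error-correcting codes across servers; the server names and the `check_and_repair` helper are hypothetical.

```python
import hashlib
import hmac


def mac(key, data):
    """Message authentication code of a replica."""
    return hmac.new(key, data, hashlib.sha256).digest()


def check_and_repair(key, expected_tag, replicas):
    """Verify every replica and overwrite corrupted ones from an intact copy.

    `replicas` maps a (hypothetical) server name to the bytes it stores.
    Returns the list of servers that had to be repaired.
    """
    intact = [name for name, data in replicas.items()
              if hmac.compare_digest(mac(key, data), expected_tag)]
    if not intact:
        raise ValueError("no intact replica left to repair from")
    healthy_copy = replicas[intact[0]]
    repaired = []
    for name in replicas:
        if name not in intact:
            replicas[name] = healthy_copy
            repaired.append(name)
    return repaired
```

As long as one copy still matches the owner's tag, every damaged copy can be restored, which is how redundancy supports both availability and integrity.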

POR for large files: The file is accessed with a single key, which is based on the file's size[13]. Users can access only a small portion of the file, and that portion is independent of the rest of the file. The method uses sentinels, which are hidden among the data blocks and embedded at random positions[14].
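
A toy version of the sentinel idea is sketched below. It assumes the data blocks are already encrypted so that sentinels look like ordinary blocks, and it leaves out the error-correcting code a real POR scheme would add. The block size and the `sentinel`, `encode`, and `verify` names are assumptions for the example.

```python
import hashlib
import hmac
import random

BLOCK = 8  # bytes per block, for illustration only


def sentinel(key, j):
    """Derive the j-th sentinel value from the secret key."""
    digest = hmac.new(key, b"sentinel" + j.to_bytes(4, "big"), hashlib.sha256)
    return digest.digest()[:BLOCK]


def encode(key, blocks, n_sentinels):
    """Hide sentinels at key-dependent random positions among the data blocks."""
    total = len(blocks) + n_sentinels
    rng = random.Random(key)  # reproducible placement; illustration only
    positions = sorted(rng.sample(range(total), n_sentinels))
    index_of = {pos: j for j, pos in enumerate(positions)}
    data_iter = iter(blocks)
    stored = [sentinel(key, index_of[i]) if i in index_of else next(data_iter)
              for i in range(total)]
    return stored, positions


def verify(key, stored, positions, challenge):
    """Spot-check: the challenged sentinels must come back unmodified."""
    return all(
        hmac.compare_digest(stored[positions[j]], sentinel(key, j))
        for j in challenge
    )
```

Because the server cannot tell sentinels from data, damaging a large part of the file is likely to damage some sentinels, and the spot check then fails.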

RSA and MD5

Data security and integrity in the cloud can be provided by combining the partially homomorphic property of RSA with MD5 hashing. The RSA algorithm is used for authentication; it authenticates the process through encryption and decryption, which provides the security.

Partial homomorphism in RSA: RSA is homomorphic with respect to multiplication[15]. Multiplying two RSA ciphertexts gives a ciphertext that decrypts to the product of the two corresponding plaintexts (modulo the RSA modulus). Key generation, encryption, and decryption are the three steps taken to secure the data.
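
The multiplicative property can be checked with textbook RSA on deliberately tiny numbers. The primes, exponent, and messages below are illustrative assumptions; real deployments use large keys and padding, and padding removes this property.

```python
# Key generation with toy primes (far too small for real use).
p, q = 61, 53
n = p * q                  # RSA modulus
phi = (p - 1) * (q - 1)
e = 17                     # public exponent, coprime to phi
d = pow(e, -1, phi)        # private exponent (modular inverse, Python 3.8+)


def encrypt(m):
    """Textbook RSA encryption: c = m^e mod n."""
    return pow(m, e, n)


def decrypt(c):
    """Textbook RSA decryption: m = c^d mod n."""
    return pow(c, d, n)


m1, m2 = 7, 12
# Multiply the two ciphertexts without ever decrypting them.
c_product = (encrypt(m1) * encrypt(m2)) % n
# Decrypting the product of ciphertexts yields the product of plaintexts.
assert decrypt(c_product) == (m1 * m2) % n
```

The check works because (m1^e)(m2^e) = (m1·m2)^e modulo n, so a server can multiply encrypted values on behalf of a user without learning them.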

MD5 hashing algorithm: MD5 is a computation-based function, also known as a message digest algorithm. Three types of operations take place in the MD5 algorithm[16].

- Bitwise Boolean operation

- Modular addition

- Cyclic shift operation

The method works through padding and compression. Padding extends the input message so that it can be split into blocks of 512 bits.
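
The padding rule and the resulting digest can be illustrated with the standard `hashlib` module. The helper names are assumptions made for the example. MD5 is known to be vulnerable to collision attacks, so the sketch only illustrates the algorithm as it is described here.

```python
import hashlib


def md5_padded_length(message_len):
    """Length in bytes of a message after MD5 padding.

    MD5 appends one 0x80 byte, then zero bytes, then an 8-byte length field,
    so the padded message is always a multiple of 64 bytes (512 bits).
    """
    return ((message_len + 8) // 64 + 1) * 64


def md5_hex(data):
    """Return the 128-bit MD5 digest as 32 hexadecimal characters."""
    return hashlib.md5(data).hexdigest()
```

For instance, a 55-byte message still fits in a single 512-bit block after padding, while a 56-byte message needs two blocks.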

When these two processes are implemented in a public cloud, the result is obtained through the following steps:

Encryption: Encryption is the first step. The public and private keys are generated during encryption, and these keys are later used to decrypt the message. The key is provided to the cloud along with the encrypted message, which establishes the user's identity in the cloud service[17]. Encrypting the file also yields the key for decryption.

Decryption: Decryption is done at the receiver's end after the end user has received the message. The private key generated during encryption is used for this task.

Data uploading: After encryption, the files are placed in the cloud and the details of the files are provided.
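
The three steps can be put together in a small end-to-end sketch. It is an illustration under loud assumptions: byte-by-byte textbook RSA with toy primes is not secure, the `cloud` dictionary stands in for a real storage service, and every function name is hypothetical. The MD5 digest of the ciphertext is kept by the user and plays the role of the file details used to check integrity on download.

```python
import hashlib


def generate_keys(p=61, q=53, e=17):
    """Return (public, private) textbook RSA keys built from toy primes."""
    n = p * q
    d = pow(e, -1, (p - 1) * (q - 1))
    return (e, n), (d, n)


def encrypt(public_key, data):
    """Encrypt each byte separately (illustration only, not secure)."""
    e, n = public_key
    return [pow(byte, e, n) for byte in data]


def decrypt(private_key, cipher):
    """Recover the original bytes with the private key."""
    d, n = private_key
    return bytes(pow(c, d, n) for c in cipher)


def digest(cipher):
    """MD5 digest of the ciphertext, used as the integrity record."""
    raw = b"".join(c.to_bytes(2, "big") for c in cipher)
    return hashlib.md5(raw).hexdigest()


cloud = {}  # hypothetical stand-in for the remote storage service


def upload(name, cipher):
    """Store the ciphertext remotely and return the digest the user keeps."""
    cloud[name] = list(cipher)
    return digest(cipher)


def download(name, expected_digest):
    """Fetch the ciphertext and refuse it if the digest no longer matches."""
    cipher = cloud[name]
    if digest(cipher) != expected_digest:
        raise ValueError("integrity check failed")
    return cipher
```

A typical round trip is `public, private = generate_keys()`, then `kept = upload("report", encrypt(public, data))`, and later `decrypt(private, download("report", kept))`.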

Conclusion

Cloud computing is one of the technologies most widely used today. Although it has many users, security and integrity of the data remain essential. This report has described in detail the issues that arise in security and integrity.

It has also presented technologies for improving security and integrity[18]. By using the appropriate technique, data and sensitive information can be kept highly secure, while users also benefit from high performance and efficiency.

References

[1] S. C. G. Gaurav Pachauri, “ENSURING DATA INTEGRITY IN CLOUD DATA STORAGE,” International Journal of Innovative Science, Engineering & Technology, vol. 1, no. 1, pp. 53-58, 2014.

[2] P. H. R. P. J. P. Ravi Kumar, “Exploring Data Security Issues and Solutions in Cloud Computing,” 6th International Conference on Smart Computing and Communications, pp. 691-697, 2017.

[3] M. A. H. Monjur Ahmed, “CLOUD COMPUTING AND SECURITY ISSUES IN THE CLOUD,” International Journal of Network Security & Its Applications, vol. 6, no. 1, pp. 25-36, 2014.

[4] W. A. Sultan Aldossary, “Data Security, Privacy, Availability and Integrity in Cloud Computing: Issues and Current Solutions,” (IJACSA) International Journal of Advanced Computer Science and Applications, vol. 7, no. 4, pp. 485-498, 2016.

[5] N. S. NEDHAL A. AL-SAIYD, “DATA INTEGRITY IN CLOUD COMPUTING SECURITY,” Journal of Theoretical and Applied Information Technology, vol. 58, no. 3, pp. 570-581, 2013.

[6] P. J. V. J. Yogita Gunjal, “Data Security And Integrity Of Cloud Storage In Cloud Computing,” International Journal of Innovative Research in Science, Engineering and Technology, vol. 2, no. 4, pp. 1166-1170, 2013.

[7] A. K. S. Neha Thakur, “Data Integrity Techniques in Cloud Computing: An Analysis,” International Journals of Advanced Research in Computer Science and Software Engineering, vol. 7, no. 8, pp. 121-125, 2017.

[8] P. Priyanka Ora, “Data Security and Integrity in Cloud Computing,” IEEE International Conference on Computer, Communication and Control, 2015.

[9] Aldossary, S. and Allen, W., 2016. Data security, privacy, availability and integrity in cloud computing: issues and current solutions. International Journal of Advanced Computer Science and Applications, 7(4), pp.485-498.

[10] Sukumaran, S.C. and Mohammed, M., 2018. PCR and Bio-signature for data confidentiality and integrity in mobile cloud computing. Journal of King Saud University-Computer and Information Sciences.

[11] Xu, X., Liu, G. and Zhu, J., 2016, October. Cloud data security and integrity protection model based on distributed virtual machine agents. In 2016 International Conference on Cyber-Enabled Distributed Computing and Knowledge Discovery (CyberC) (pp. 6-13). IEEE.

[12] Mahamane, P.L. and Patil, S.A., 2017. Cloud Data Security and Integrity using Sack of Cryptographic Algorithms through Trusted Third Party (TTP). IJETT, 1(2).

[13] Selvan, A.M. and Sujaritha, M., 2016. A survey on data security and integrity in cloud computing. International Journal of Advanced Research in Computer Science, 7(4).

[14] Mahmood, G.S., Huang, D.J. and Jaleel, B.A., 2019. Achieving an Effective, Confidentiality and Integrity of Data in Cloud Computing. IJ Network Security, 21(2), pp.326-332.

[15] Singh, S., Scholar, M.T., Nafis, T. and Sethi, A., 2017. Cloud Computing: Security Issues & Solution. vol, 13, pp.1419-1429.

[16] Udendhran, R., 2017, March. A hybrid approach to enhance data security in cloud storage. In Proceedings of the Second International Conference on Internet of things, Data and Cloud Computing (pp. 1-6).

[17] Pitchai, R., Babu, S., Supraja, P. and Anjanayya, S., 2019. Prediction of availability and integrity of cloud data using soft computing technique. Soft Computing, 23(18), pp.8555-8562.

[18] Hong, J., Wen, T., Guo, Q., Ye, Z. and Yin, Y., 2019. Privacy protection and integrity verification of aggregate queries in cloud computing. Cluster Computing, 22(3), pp.5763-5773.