Python Image Classification


Introduction

Have you ever come across a dataset or an image and wondered whether you could build a system that could distinguish or recognize it? Image classification helps us do exactly that. Image classification is one of the hottest applications of computer vision and a must-know concept for anyone wishing to work in this field. In this article we look at this basic yet widely used application. We will see not only how to classify the data with a simple and efficient model, but also learn how to apply a pre-trained model and compare the results of the two. By the end of the article, you should be able to find a dataset and implement image classification with ease.

Prerequisites for this Model:-

- Python programming

- Keras and its modules

- Basic understanding of Image Classification

- Convolutional Neural Networks and their implementation

- Basic understanding of Transfer learning

Image Classification

Image classification is the task of assigning one label from a fixed set of classes to an input image. It is one of the core problems in computer vision and, despite its simplicity, has a wide range of practical applications. To understand it better, let's take an example. When we perform image classification, our system receives an image as input, for example a cat. The machine is aware of a set of categories, and its goal is to assign the image to one of them. This problem may seem simple, but it is a hard one for the machine to solve. As you may know, the machine sees a grid of numbers, not the picture of a cat that we see with our eyes. Images are three-dimensional arrays of integers from 0 to 255, of size Width x Height x 3. The 3 represents the three color channels: red, green and blue.

So how does our machine learn to identify this image? By using Convolutional Neural Networks. Convolutional neural networks, or CNNs, are a class of deep learning neural networks that represent a major step forward in image recognition. By now you may have a good understanding of CNNs: they are made up of convolutional layers, ReLU layers, pooling layers, and fully connected dense layers.
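To make the "grid of numbers" idea concrete, here is a minimal sketch that builds a tiny synthetic image with NumPy instead of loading a real photo. The 4 x 6 size and the pixel values are assumptions chosen purely for illustration.

```python
import numpy as np

# Hypothetical 4-pixel-high, 6-pixel-wide RGB image, filled with black.
height, width = 4, 6
image = np.zeros((height, width, 3), dtype=np.uint8)

# Paint the top-left pixel pure red: (R, G, B) = (255, 0, 0).
image[0, 0] = [255, 0, 0]

print(image.shape)  # (4, 6, 3)
print(image.dtype)  # uint8
```

Note that NumPy and OpenCV index rows first, so the array shape is (height, width, 3).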

Step 1:- Import the required libraries

We will use the Keras library to build and train our model. To visualize our dataset, we also use Matplotlib and Seaborn to get a better understanding of the images we will be handling. OpenCV is another important library for handling image files.

    import matplotlib.pyplot as plt
    import seaborn as sns

    import keras
    from keras.models import Sequential
    from keras.layers import Dense, Conv2D, MaxPool2D, Flatten, Dropout
    from keras.preprocessing.image import ImageDataGenerator
    from keras.optimizers import Adam

    from sklearn.metrics import classification_report, confusion_matrix

    import tensorflow as tf

    import cv2
    import os

    import numpy as np

Step 2:- Loading the data

Next, let's define the path to our data. We define a function called get_data() that makes it easier to build our datasets for training and validation. We define the two labels that we use, 'rugby' and 'soccer'. We use OpenCV's imread function to read the images, convert them to RGB format, and resize them to our desired width and height, both of which are 224.

    labels = ['rugby', 'soccer']
    img_size = 224

    def get_data(data_dir):
        data = []
        for label in labels:
            path = os.path.join(data_dir, label)
            class_num = labels.index(label)
            for img in os.listdir(path):
                try:
                    # OpenCV loads images as BGR; reverse the channel axis to get RGB
                    img_arr = cv2.imread(os.path.join(path, img))[..., ::-1]
                    # Resize each image to the preferred size
                    resized_arr = cv2.resize(img_arr, (img_size, img_size))
                    data.append([resized_arr, class_num])
                except Exception as e:
                    print(e)
        # dtype=object because each row pairs an image array with an integer label
        return np.array(data, dtype=object)

Now we can easily fetch our train and validation data.

    train = get_data('../input/traintestsports/Main/train')
    val = get_data('../input/traintestsports/Main/test')
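OpenCV's imread returns channels in blue-green-red order, which is why get_data() reverses the last axis of each image. A minimal sketch of that slice on a hypothetical one-pixel array, with no image file required:

```python
import numpy as np

# Hypothetical 1x1 image in OpenCV's BGR order: pure blue.
bgr_pixel = np.array([[[255, 0, 0]]], dtype=np.uint8)

# Reversing the last axis swaps the first and third channels.
rgb_pixel = bgr_pixel[..., ::-1]

print(rgb_pixel[0, 0].tolist())  # [0, 0, 255]
```

Calling cv2.cvtColor(img, cv2.COLOR_BGR2RGB) is a common alternative to the slice.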

Step 3:- Visualize the data

Let's visualize our data to see exactly what we are dealing with. We use Seaborn to plot the number of images in each class, and you can see what the output looks like.

    l = []
    for i in train:
        if i[1] == 0:
            l.append("rugby")
        else:
            l.append("soccer")

    sns.set_style('darkgrid')
    sns.countplot(x=l)

Output

Let us also visualize a random image from the Rugby and Soccer classes:-

    plt.figure(figsize=(5, 5))
    plt.imshow(train[1][0])
    plt.title(labels[train[1][1]])

Similarly for Soccer image:-

    plt.figure(figsize=(5, 5))
    plt.imshow(train[-1][0])
    plt.title(labels[train[-1][1]])

Step 4:- Data Pre-processing and Data Augmentation

Next, we perform some Data Pre-processing and Data Augmentation before we can proceed with building the model.

    x_train = []
    y_train = []
    x_val = []
    y_val = []

    for feature, label in train:
        x_train.append(feature)
        y_train.append(label)

    for feature, label in val:
        x_val.append(feature)
        y_val.append(label)

    # Normalize the data
    x_train = np.array(x_train) / 255
    x_val = np.array(x_val) / 255

    # reshape() returns a new array, so assign the result; the images have 3 color channels
    x_train = x_train.reshape(-1, img_size, img_size, 3)
    y_train = np.array(y_train)

    x_val = x_val.reshape(-1, img_size, img_size, 3)
    y_val = np.array(y_val)
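Two details of this step are easy to miss: dividing by 255 rescales pixel values into the range 0 to 1, and ndarray.reshape returns a new array rather than changing the original, so its result has to be assigned. A small sketch on a hypothetical batch of two 2 x 2 RGB images:

```python
import numpy as np

# Hypothetical batch: two 2x2 RGB images with 8-bit pixel values.
batch = np.array([[[[0, 128, 255]] * 2] * 2] * 2, dtype=np.uint8)

# Dividing by 255 produces floats in the range [0, 1].
scaled = batch / 255

# reshape() leaves `scaled` untouched and returns the reshaped array.
flat = scaled.reshape(2, -1)

print(scaled.shape, flat.shape)    # (2, 2, 2, 3) (2, 12)
print(scaled.min(), scaled.max())  # 0.0 1.0
```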

Data augmentation on the train data:-

    datagen = ImageDataGenerator(
        featurewise_center=False,  # set input mean to 0 over the dataset
        samplewise_center=False,  # set each sample mean to 0
        featurewise_std_normalization=False,  # divide inputs by std of the dataset
        samplewise_std_normalization=False,  # divide each input by its std
        zca_whitening=False,  # apply ZCA whitening
        rotation_range=30,  # randomly rotate images in the range (degrees, 0 to 180)
        zoom_range=0.2,  # randomly zoom image
        width_shift_range=0.1,  # randomly shift images horizontally (fraction of total width)
        height_shift_range=0.1,  # randomly shift images vertically (fraction of total height)
        horizontal_flip=True,  # randomly flip images horizontally
        vertical_flip=False)  # do not flip images vertically

    datagen.fit(x_train)
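ImageDataGenerator applies these transformations randomly each time a batch is drawn. As a rough illustration of what a horizontal flip and a horizontal shift do to the pixel grid, here is a NumPy-only sketch. It is not how Keras implements them: the tiny array and the use of np.roll (which wraps pixels around the edge instead of filling them in) are simplifying assumptions.

```python
import numpy as np

# Hypothetical 1-row, 4-column grayscale strip so the effect is easy to read.
strip = np.array([[10, 20, 30, 40]])

# Horizontal flip: reverse the column (width) axis.
flipped = strip[:, ::-1]

# Horizontal shift by one pixel to the right, wrapping at the edge.
shifted = np.roll(strip, shift=1, axis=1)

print(flipped.tolist())  # [[40, 30, 20, 10]]
print(shifted.tolist())  # [[40, 10, 20, 30]]
```

Note that the training call later in this article passes x_train directly to model.fit, so the generator is configured but its augmented batches are not used. To train on augmented data, one would typically pass datagen.flow(x_train, y_train, batch_size=32) to model.fit instead.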

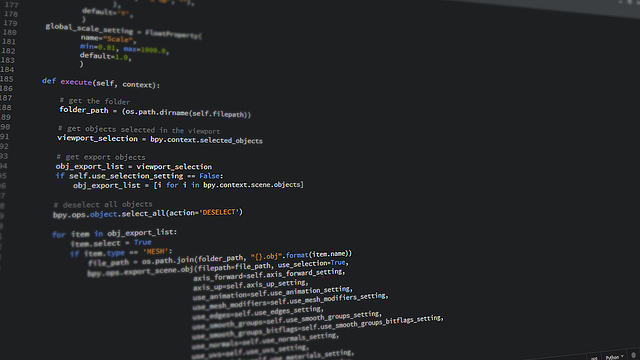

Step 5:- Define the Model

Let’s define a simple CNN model with 3 Convolutional layers followed by max-pooling layers. A dropout layer is added after the 3rd maxpool operation to avoid overfitting.

    model = Sequential()
    model.add(Conv2D(32, 3, padding="same", activation="relu", input_shape=(224, 224, 3)))
    model.add(MaxPool2D())

    model.add(Conv2D(32, 3, padding="same", activation="relu"))
    model.add(MaxPool2D())

    model.add(Conv2D(64, 3, padding="same", activation="relu"))
    model.add(MaxPool2D())
    model.add(Dropout(0.4))

    model.add(Flatten())
    model.add(Dense(128, activation="relu"))
    model.add(Dense(2, activation="softmax"))

    model.summary()
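The shapes and parameter counts that model.summary() reports can be checked by hand. The sketch below assumes the settings used in this model: 3 x 3 kernels with "same" padding (which keeps height and width unchanged) and the default 2 x 2 max pooling (which halves them). The helper names are hypothetical.

```python
def conv_params(kernel, in_channels, filters):
    """Weights plus one bias per filter for a square-kernel Conv2D layer."""
    return kernel * kernel * in_channels * filters + filters


def dense_params(inputs, units):
    """Weights plus one bias per unit for a Dense layer."""
    return inputs * units + units


size = 224                    # input height and width
for _ in range(3):            # three MaxPool2D layers, each halving the size
    size //= 2

flattened = size * size * 64  # 64 filters in the last Conv2D layer

total = (
    conv_params(3, 3, 32)     # first Conv2D on the RGB input
    + conv_params(3, 32, 32)  # second Conv2D
    + conv_params(3, 32, 64)  # third Conv2D
    + dense_params(flattened, 128)
    + dense_params(128, 2)
)

print(size, flattened, total)  # 28 50176 6451554
```

Nearly all of the parameters sit in the first Dense layer, which connects the 50,176 flattened features to 128 units.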

Let’s compile the model now using Adam as our optimizer and SparseCategoricalCrossentropy as the loss function. We are using a lower learning rate of 0.000001 for a smoother curve.

    opt = Adam(learning_rate=0.000001)
    # The last layer already applies softmax, so the loss receives probabilities, not logits
    model.compile(optimizer=opt,
                  loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
                  metrics=['accuracy'])
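Sparse categorical cross-entropy takes integer class labels and, for each sample, uses the negative log of the probability the model assigned to the true class. Here is a NumPy sketch of that formula with made-up probabilities. It mirrors the definition rather than the Keras implementation, and the function and variable names are hypothetical.

```python
import numpy as np


def sparse_categorical_crossentropy(y_true, probs):
    """Mean of -log(p[true class]) over the batch."""
    y_true = np.asarray(y_true)
    probs = np.asarray(probs, dtype=float)
    picked = probs[np.arange(len(y_true)), y_true]
    return float(-np.log(picked).mean())


# Hypothetical softmax outputs for three images (columns: rugby, soccer).
probs = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]
labels_true = [0, 1, 0]

loss = sparse_categorical_crossentropy(labels_true, probs)
print(round(loss, 4))  # 0.3406
```

Because the formula expects probabilities, a model whose last layer already applies softmax is paired with from_logits=False. With from_logits=True, the loss would expect raw, un-normalized scores instead.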

Now, let’s train our model for 500 epochs since our learning rate is very small.

    history = model.fit(x_train, y_train, epochs=500, validation_data=(x_val, y_val))

Step 6:- Evaluating the result

We will plot our training and validation accuracy along with training and validation loss.

    acc = history.history['accuracy']
    val_acc = history.history['val_accuracy']
    loss = history.history['loss']
    val_loss = history.history['val_loss']

    epochs_range = range(500)

    plt.figure(figsize=(15, 15))
    plt.subplot(2, 2, 1)
    plt.plot(epochs_range, acc, label='Training Accuracy')
    plt.plot(epochs_range, val_acc, label='Validation Accuracy')
    plt.legend(loc='lower right')
    plt.title('Training and Validation Accuracy')

    plt.subplot(2, 2, 2)
    plt.plot(epochs_range, loss, label='Training Loss')
    plt.plot(epochs_range, val_loss, label='Validation Loss')
    plt.legend(loc='upper right')
    plt.title('Training and Validation Loss')
    plt.show()
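The accuracy curves do not show which class is being confused with which. One way to look at that is a confusion matrix over the validation set. The sketch below uses NumPy only, with made-up probabilities standing in for model.predict(x_val). sklearn's confusion_matrix, imported in Step 1, follows the same layout, with rows for the true class and columns for the predicted class.

```python
import numpy as np

# Hypothetical stand-ins for y_val and model.predict(x_val).
y_true = np.array([0, 0, 1, 1, 1])
probs = np.array([[0.8, 0.2], [0.4, 0.6], [0.3, 0.7], [0.1, 0.9], [0.6, 0.4]])

# The predicted class is the column with the highest probability.
y_pred = probs.argmax(axis=1)

# Rows index the true class, columns the predicted class.
matrix = np.zeros((2, 2), dtype=int)
np.add.at(matrix, (y_true, y_pred), 1)

accuracy = (y_true == y_pred).mean()
print(matrix.tolist())  # [[1, 1], [1, 2]]
print(accuracy)         # 0.6
```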

Conclusion

We saw that our models misclassified many images, which means there is still room for improvement. That is not the end. We could start by gathering more data, or even by implementing better and newer architectures that might be better at identifying the features. I hope this article has helped you understand image classification using Python.
