Report Type B: “Explainable AI” (LITERATURE REVIEW)

Abstract

This paper reviews the literature on the challenges facing AI/ML systems and on the usefulness of explainable AI in addressing them. The review finds that challenges related to limited reasoning power, contextual limitations, and the opaque internal workings of deep learning can be addressed with explainable AI.

dgLARS and Morse-Smale regression are key developing areas of explainable AI that may support better human-machine interaction in the future. The use of MI systems also offers future opportunities in areas such as business, security, healthcare, new services, and sustainability.

Introduction

In today’s technology-driven environment, machine learning (ML) has a significant impact on people’s lives, and many industries also benefit from it. Machine learning is a type of artificial intelligence that learns and improves from data without being explicitly programmed to do so.

It is used to analyze data and predict probable outcomes (Jiang et al., 2017). The technology helps address many business complexities and improves the effectiveness of business operations and expansion.

Although the prospects for machine learning are promising, there are inherent problems with machine learning and artificial intelligence that may limit their use (Brynjolfsson & Mitchell, 2017).

This paper explains these challenges and how the Explainable AI approach can be used to overcome them. It also discusses the latest progress in Explainable AI, the potential future of the field, and its impact on ML.

Challenges of current ML systems

According to Seshia et al. (2016), reasoning power is a significant challenge in the use of ML, because ML systems lack this distinctly human trait. ML algorithms are built around specific use cases.

As a result, an ML system cannot reason about why a particular outcome occurs or ‘introspect’ on its own results. For example, an image recognition algorithm trained to identify apples and oranges cannot tell whether the fruit has gone bad, or explain why a given fruit is an apple or an orange.

A human may be able to describe this distinction mathematically, but the algorithm itself cannot produce such an explanation. In other words, ML algorithms do not have the ability to reason beyond their intended application (Holzinger, 2016).

However, Brynjolfsson & Mitchell (2017) describe the benefits of AI approaches that use transparent, human-like reasoning to solve problems. A team of researchers from MIT Lincoln Laboratory’s Intelligence and Decision Technologies Group has also studied this and created a neural network named the Transparency by Design Network (TbD-net).

This network uses human-like reasoning steps to answer questions about the contents of images. Neural networks are brain-inspired AI systems intended to mimic the way humans learn.

These networks consist of input and output layers with further layers in between that transform the input into the appropriate output, but some deep neural networks are so complex that this transformation process cannot be followed (Mosavi & Varkonyi-Koczy, 2017).

For this reason, they are referred to as “black box” systems, which are opaque even to the engineers who build them. The TbD-net developers likewise note that such neural networks lack a mechanism that would let humans understand their reasoning process.

The study of Zhou et al. (2017), meanwhile, reveals a contextual limitation in ML systems, which process language in the form of text and speech through natural language processing (NLP) algorithms.

These algorithms can learn letters, words, sentences, and even syntax, but they do not capture the context in which the language is used.

Supporting this, L’heureux et al. (2017) affirm that computer programs and algorithms grasp an idea merely through ‘symbols’ rather than through the context in which it is given. ML systems therefore have no understanding of the situation they operate in.

Their interpretation is limited to memorized associations rather than an understanding of what is actually going on. In addition, Dove et al. (2017) point out that there is a lack of proper understanding of the internal workings of deep learning, the subfield of ML that accounts for much of the current growth of artificial intelligence.

Deep learning (DL) powers applications such as voice recognition and image recognition through artificial neural networks. However, the internal workings of DL are still not well understood. Similarly, Wuest et al. (2016) note that advanced DL algorithms leave researchers uncertain about how they work and how efficient they are. Deep learning is therefore regarded as a black box, because its internal logic is not known.

Explainable AI approach to overcome these challenges

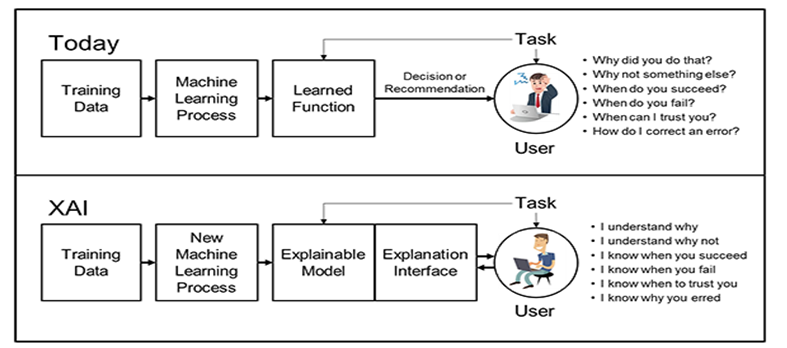

These challenges need to be overcome, and Explainable AI (XAI), also called Interpretable AI or Transparent AI, can help build trust by making a system’s reasoning easy for humans to understand.

It stands in contrast to the concept of the “black box” in machine learning, in which even the designers cannot explain how the ML system arrives at a particular decision (Whitworth & Suthaharan, 2014).

In relation to this technique, …….. claim that XAI is effective in fulfilling the right to an explanation behind any decision. It may produce more explainable models while maintaining a high level of learning performance and prediction accuracy. It can also help humans understand, appropriately trust, and effectively manage their emerging artificially intelligent partners.

Similarly, Samek et al. (2017) point out that XAI systems can explain their rationale, characterize their strengths and weaknesses, and convey how they will behave in the future. These models can provide understandable and useful explanation dialogues for the end user.

Apart from this, Gunning (2017) notes that these machines will understand the context and environment in which they operate and produce outcomes that relate to real-world phenomena.

The latest progress in Explainable AI

dgLARS is one of the newer algorithms; it is based on overlapping tangent spaces of the model and is particularly useful when the predictors are correlated. Morse-Smale regression is another recent concept in explainable AI: an interpretable, partitioned regression algorithm (Miller, 2018).

It partitions the regression function based on a defined function level. Layer-wise Relevance Propagation (LRP), in turn, is based on the idea of relevance redistribution and conservation. It works backward from the model’s output to identify the relevance of each input and provides meaningful outcomes (Müller & Bostrom, 2016).
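
The relevance redistribution and conservation idea behind LRP can be illustrated with a small sketch. This is not taken from the reviewed papers: it assumes a toy two-layer ReLU network without biases, hand-picked weights (W1, W2), a hypothetical helper named lrp_dense, and the commonly used epsilon-stabilized redistribution rule. Under these assumptions, the relevance assigned to the inputs sums (approximately) to the output score being explained.

```python
import numpy as np

def lrp_dense(a, W, R_out, eps=1e-9):
    """Hypothetical helper: redistribute relevance from a dense layer's
    outputs back to its inputs (epsilon rule, no bias terms).
    a: input activations (n_in,), W: weights (n_in, n_out),
    R_out: relevance of the layer outputs (n_out,)."""
    z = a @ W                                   # pre-activations of the layer
    z = z + eps * np.where(z >= 0, 1.0, -1.0)   # small stabilizer, avoids division by zero
    s = R_out / z                               # relevance per unit of pre-activation
    return a * (W @ s)                          # share for each input, proportional to its contribution

# Toy network with illustrative (assumed) weights: 4 inputs, 3 hidden units, 2 outputs.
W1 = np.array([[ 1.0, -1.0,  0.5],
               [ 0.5,  1.0, -1.0],
               [-1.0,  0.5,  1.0],
               [ 0.2,  0.5, -0.4]])
W2 = np.array([[ 1.0, -0.5],
               [ 0.3,  0.8],
               [-0.7,  0.2]])
x = np.array([1.0, 0.5, -0.3, 2.0])

# Forward pass.
h = np.maximum(0.0, x @ W1)    # hidden ReLU activations
out = h @ W2                   # output scores

# Backward relevance pass: start from the winning output score only.
k = int(np.argmax(out))
R_out = np.zeros_like(out)
R_out[k] = out[k]
R_h = lrp_dense(h, W2, R_out)  # relevance of the hidden units
R_x = lrp_dense(x, W1, R_h)    # relevance of the input features

print("explained output score:", out[k])
print("input relevances:", R_x)
print("sum of input relevances:", R_x.sum())
```

In this sketch the sum of the input relevances matches the explained output score up to the small stabilizer, which is the conservation property; each individual value indicates how much that input contributed to the decision.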

Potential future of the field and impact on ML

According to Miller et al. (2017), AI/ML offers future prospects for increasing security. For instance, AI-based drones can change people’s lives. They are able to transport goods by air over short distances and in complex spaces, which opens new possibilities for upcoming generations.

They can be used for package delivery, emergency response, and the delivery of medical products in an emergency, all of which improve the security of human life. They can also inspect areas that cannot be observed with the naked eye, making those places safer (Jha et al., 2017).

On the other hand, ML or AI systems can also help generate new services as existing MI is upgraded. Within some years, these systems may advance and be utilized at an unprecedented rate.

The use of MI will be crucial for enhancing the efficiency of organizations, as new AI-based products and services will create new consumer and industrial markets. However, Freedle (2014) argues that, alongside these future opportunities, ML systems can bring challenges related to privacy, security, inequality, and unemployment within the economy.

At the same time, Russell & Norvig (2016) claim that these systems will empower businesses, because in the future the trust barriers around the use of AI will fall and people will become more dependent on AI machines.

These techniques will help businesses deliver highly personalized solutions to customers. In addition, Müller & Bostrom (2016) state that AI machines will play an important role in improving healthcare services.

This is because AI can access and analyze large volumes of data to support better diagnosis and treatment for patients. Besides, Wachter et al. (2017) recognize that artificial intelligence can facilitate sustainability and help address climate change and environmental issues through better prediction of the movement of residents within cities and of areas where density can be improved.

Finally, Miller et al. (2017) suggest that the lines between the digital and the physical will blur, because computer systems will react to humans much more intelligently than they currently do. Interactions with these machines will help humans find solutions to many of their problems.

Conclusion

Based on the above discussion, it can be concluded that ML systems face several challenges, including limited reasoning power, contextual limitations, and the opaque internal workings of deep learning.

However, explainable AI can help address these challenges by providing reasoning for decisions. Notable developments in Explainable AI include dgLARS and Morse-Smale regression, which can be used to produce decisions together with a proper explanation.

In addition, ML systems offer future prospects in terms of increasing security, generating new services (and potentially new social issues), empowering businesses, improving healthcare, facilitating sustainability, and blending the lines between the digital and the physical.

References

Brynjolfsson, E., & Mitchell, T. (2017). What can machine learning do? Workforce implications. Science, 358(6370), 1530-1534.

Dove, G., Halskov, K., Forlizzi, J., & Zimmerman, J. (2017, May). UX design innovation: Challenges for working with machine learning as a design material. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (pp. 278-288). ACM.

Freedle, R. (2014). Artificial intelligence and the future of testing. Psychology Press.

Gunning, D. (2017). Explainable artificial intelligence (XAI). Defense Advanced Research Projects Agency (DARPA), n.d. Web.

Holzinger, A. (2016). Interactive machine learning for health informatics: when do we need the human-in-the-loop? Brain Informatics, 3(2), 119-131.

Jha, S. K., Bilalovic, J., Jha, A., Patel, N., & Zhang, H. (2017). Renewable energy: Present research and future scope of Artificial Intelligence. Renewable and Sustainable Energy Reviews, 77, 297-317.

Jiang, F., Jiang, Y., Zhi, H., Dong, Y., Li, H., Ma, S., … & Wang, Y. (2017). Artificial intelligence in healthcare: past, present, and future. Stroke and vascular neurology, 2(4), 230-243.

L’heureux, A., Grolinger, K., Elyamany, H. F., & Capretz, M. A. (2017). Machine learning with big data: Challenges and approaches. IEEE Access, 5, 7776-7797.

Miller, T. (2018). Explanation in artificial intelligence: Insights from the social sciences. Artificial Intelligence.

Miller, T., Howe, P., & Sonenberg, L. (2017). Explainable AI: Beware of inmates running the asylum or: How I learnt to stop worrying and love the social and behavioural sciences. arXiv preprint arXiv:1712.00547.

Mosavi, A., & Varkonyi-Koczy, A. R. (2017). Integration of machine learning and optimization for robot learning. In Recent Global Research and Education: Technological Challenges (pp. 349-355). Springer, Cham.

Müller, V. C., & Bostrom, N. (2016). Future progress in artificial intelligence: A survey of expert opinion. In Fundamental issues of artificial intelligence (pp. 555-572). Springer, Cham.

Russell, S. J., & Norvig, P. (2016). Artificial intelligence: a modern approach. Malaysia: Pearson Education Limited.

Samek, W., Wiegand, T., & Müller, K. R. (2017). Explainable artificial intelligence: Understanding, visualizing and interpreting deep learning models. arXiv preprint arXiv:1708.08296.

Seshia, S. A., Sadigh, D., & Sastry, S. S. (2016). Towards verified artificial intelligence. arXiv preprint arXiv:1606.08514.

Wachter, S., Mittelstadt, B., & Floridi, L. (2017). Transparent, explainable, and accountable AI for robotics. Science Robotics, 2(6), eaan6080.

Whitworth, J., & Suthaharan, S. (2014). Security problems and challenges in a machine learning-based hybrid big data processing network systems. ACM SIGMETRICS Performance Evaluation Review, 41(4), 82-85.

Wuest, T., Weimer, D., Irgens, C., & Thoben, K. D. (2016). Machine learning in manufacturing: advantages, challenges, and applications. Production & Manufacturing Research, 4(1), 23-45.

Zhou, L., Pan, S., Wang, J., & Vasilakos, A. V. (2017). Machine learning on big data: Opportunities and challenges. Neurocomputing, 237, 350-361.